As noted in an earlier blog post, I’m writing an article on Server Clustering for Server Management Magazine. In the article I discuss setting up an NLB cluster. This blog post shows the steps in more detail, including screenshots of each step.

Creating an NLB Cluster

There are three key steps to creating an NLB cluster in Windows Server 2008:

- Install the NLB Feature into Server 2008 on each host that you will add to an NLB cluster.

- Use the New Cluster wizard to create the cluster and add the first host.

- Use the Add Host wizard to add one or more nodes to your cluster.

You also have to set up the application that you want to cluster. For the article I created a very simple ASP.NET application that I documented in an earlier blog post.

Installing NLB in Server 2008

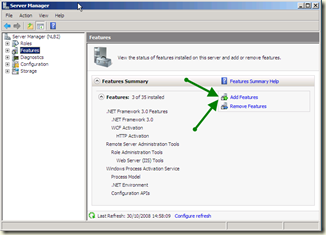

This is extremely easy. First, run Server Manager, select Features from the tree, and click Add Features:

In the Add Features wizard, just select Network Load Balancing and click Next:

Finally, on the Confirm Installation Selections page, click Install:

After a few seconds, NLB will be installed on the first host. You need to repeat this installation on each host you plan to add to your NLB Cluster. Installing the NLB feature does NOT require a reboot, but removing it does.
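If you have several hosts to prepare, the same installation can be scripted rather than clicked through. The following is a minimal sketch, assuming the Server 2008 command-line tool `ServerManagerCmd.exe` is available and that `NLB` is the feature identifier it expects (you can check with `ServerManagerCmd -query`). The function names are my own, made up for illustration.

```python
import platform
import subprocess


def build_install_command(feature_id="NLB"):
    """Build the command line that asks Server Manager to install a feature.

    The feature identifier "NLB" is an assumption; verify it on your host
    with `ServerManagerCmd -query` before relying on it.
    """
    return ["ServerManagerCmd.exe", "-install", feature_id]


def install_nlb():
    """Run the install command on the local host and return its exit code."""
    if platform.system() != "Windows":
        raise RuntimeError("ServerManagerCmd.exe only exists on Windows Server")
    # check=False so the caller can inspect the exit code instead of
    # getting an exception on a non-zero result.
    return subprocess.run(build_install_command(), check=False).returncode
```

You would run `install_nlb()` from an elevated prompt on each host you plan to add to the cluster.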

Creating your NLB Cluster

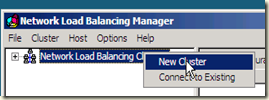

Once NLB is installed, you create your cluster by first bringing up NLB Manager:

Then right-click the Network Load Balancing cluster node in the left pane, and select New Cluster:

This brings up the first page of the New Cluster Wizard – here you select the first member of your cluster by adding the IP address or DNS name into the Host box:

This shows you the interfaces on this host that you can use for configuring a new cluster. Choose the interface and click Next:

This brings up the Host Parameters page, where you set the host's priority and the IP address this host will use in the cluster, then click Next:

This brings up the Cluster IP address page. Here you add the address used by clients to connect to nodes in the cluster:

After you add the IP address and click Next, the New Cluster wizard displays the Cluster Parameters page, where you specify the cluster IP configuration and the cluster operation mode (then click Next):

Finally, the wizard displays the port rules. In this case, we specify that the cluster should handle TCP port 80 (and click Finish):
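To make the idea of a port rule a little more concrete, here is a small sketch of what a rule boils down to: a port range plus a protocol, against which incoming traffic is matched. This is only an illustrative model, not NLB's own data structure; the class and field names are hypothetical.

```python
from dataclasses import dataclass

# Protocol choices offered for a port rule.
PROTOCOLS = {"TCP", "UDP", "Both"}


@dataclass(frozen=True)
class PortRule:
    """Illustrative model of a port rule: a port range and a protocol."""

    start_port: int
    end_port: int
    protocol: str = "Both"

    def __post_init__(self):
        # Reject ranges that are reversed or outside the valid port space.
        if not (0 <= self.start_port <= self.end_port <= 65535):
            raise ValueError("ports must satisfy 0 <= start <= end <= 65535")
        if self.protocol not in PROTOCOLS:
            raise ValueError(f"protocol must be one of {sorted(PROTOCOLS)}")

    def matches(self, port, protocol):
        """Return True if traffic on this port and protocol falls under the rule."""
        protocol_ok = self.protocol == "Both" or self.protocol == protocol
        return protocol_ok and self.start_port <= port <= self.end_port


# The rule used in this walkthrough: TCP port 80 only.
http_rule = PortRule(start_port=80, end_port=80, protocol="TCP")
```

With this rule, `http_rule.matches(80, "TCP")` is true, while traffic to port 443 or UDP traffic to port 80 does not match.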

The Wizard then does the necessary configuration, resulting in a single node NLB cluster, shown in the NLB manager like this:

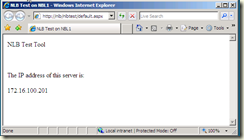

At this point, you can navigate to the cluster to see the application running:
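If you would rather check this from a script than a browser, something like the following works. It is a minimal sketch, assuming the application answers plain HTTP on port 80 at the cluster address; the address `192.0.2.50` in the usage note is a placeholder, not the one from my lab.

```python
import urllib.request


def cluster_url(cluster_address, port=80, path="/"):
    """Build the URL a client would use to reach the clustered application."""
    return f"http://{cluster_address}:{port}{path}"


def is_serving(url, timeout=5.0):
    """Return True if the URL answers with HTTP 200 within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers refused connections, timeouts, and HTTP error statuses,
        # all of which urllib reports as OSError subclasses.
        return False
```

For example, `is_serving(cluster_url("192.0.2.50"))` tells you whether some host in the cluster answered the request.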

Adding Additional Nodes

A single-node cluster is not of much value, so you next need to add an additional node (or nodes). To add a node, right-click your newly created cluster and select Add Host to Cluster:

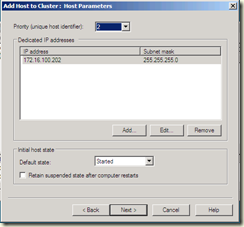

This brings up the Add Host to Cluster: Connect page where you specify the host to add, and the interface used in the cluster:

Next you specify the new host’s parameters:

Then you get to update, if needed, the cluster’s port rules:

Clicking Next completes the wizard, resulting in a second host in the cluster, as seen in NLB Manager:

Navigating to the cluster again in your browser may (or may not) result in a different page. Whilst running the wizard to create the screenshots shown here, this did change:
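Why "may or may not"? Each host decides for itself which clients it serves, based on the client's address and the rule's affinity setting. With Single affinity only the client IP is considered, so one client tends to stick to one host; with None, the client port counts too. The toy model below illustrates the idea. It is emphatically not NLB's real algorithm (I use a generic hash here), and the host names `NLB1` and `NLB2` are hypothetical.

```python
import hashlib


def pick_host(client_ip, client_port, hosts, affinity="single"):
    """Return the host that serves this client in the toy model.

    affinity="single" keys on the client IP only;
    affinity="none" keys on the client IP and port.
    """
    if not hosts:
        raise ValueError("the cluster has no hosts")
    if affinity == "single":
        key = client_ip
    elif affinity == "none":
        key = f"{client_ip}:{client_port}"
    else:
        raise ValueError("affinity must be 'single' or 'none'")
    # A stable hash, so the same key always maps to the same host
    # for a given list of hosts.
    digest = hashlib.sha256(key.encode("ascii")).digest()
    index = int.from_bytes(digest[:4], "big") % len(hosts)
    return hosts[index]
```

With one host in the list, every client lands on it. Once a second host is added, the mapping is recomputed, so the same browser may end up on either node, which matches what I saw.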

So – it’s simple and easy to create an NLB cluster in Server 2008!