Here are the resources I’ve found so far. I am updating this regularly as I find more stuff!

Last Update: 13:15 18 September 2010

Background Information

Here is some background information on Lync Server 2010 and Lync 2010:

- Lync Home Page – this is Lync’s home page on Microsoft.com: http://www.microsoft.com/en-us/lync/default.aspx

- Lync Technologies – this page lets you explore the technologies and capabilities of Lync.

- CS ‘14’ Overview Presentation – this is the TechEd deck, updated by Joachim Farla, an OCS MVP from the Netherlands. At 16 slides it’s brief, and a good starting point for understanding Lync 2010.

- UC Open Interoperability Program – a program under which MS certifies SIP-PSTN gateways, IP-PBXs and SIP trunking services. http://technet.microsoft.com/en-us/office/ocs/bb735838.aspx

- Microsoft Lync Server 2010 RC Evaluation Resources – a nice page from TechNet that provides lots of background information. http://www.microsoft.com/en-us/lync/reviews-news.aspx

- Lync Tech Center – a TechNet sub-site with technical information from MS on Lync. http://technet.microsoft.com/en-us/lync/default.aspx

- Microsoft Lync Solution Centre – a Microsoft support sub-site on Lync. http://support.microsoft.com/ph/924#tab0

Lync Software Components

Microsoft has released several parts of the product as separate downloads:

- Microsoft Lync Server 2010 RC – this is the server code needed to deploy a test version of Lync Server in your environment. Get the server DVD ISO image from here: http://www.microsoft.com/downloads/en/details.aspx?FamilyID=29366ba5-498f-4d21-bc3e-0b4e8ba58fb1&utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+MicrosoftDownloadCenter+%28Microsoft+Download+Center%29#tm. This ISO contains the code to run Lync Server (64-bit only) and the Lync client (32-bit and 64-bit).

- Microsoft Lync 2010 Attendee RC (admin install) – this is the client code that effectively replaces the OCS 2007 Live Meeting Console (and is both separate from and independent of the Lync client) - http://www.microsoft.com/downloads/en/details.aspx?FamilyID=1772A5AD-9688-4861-8387-EC30411BF455

- Microsoft Lync 2010 Attendee RC (user level install) - http://www.microsoft.com/downloads/en/details.aspx?FamilyID=68A3CA04-A058-4E47-98EA-9E9AF7EBD6E3

- Lync 2010 Unified Communications Managed API (UCMA) 3.0 Software Development Kit – a managed-code API set for building server-side applications that integrate with and extend Lync experiences. Get it at: http://www.microsoft.com/downloads/en/details.aspx?FamilyID=d98f0bf7-c82c-47f5-9f73-be3edbf30438&utm_source=feedburner&utm_medium=twitter&utm_campaign=Feed%3A+MicrosoftDownloadCenter+%28Microsoft+Download+Center%29#tm

Microsoft Blogs

- Lync Server Team Group blog – this is from the Unified Communications team. http://blogs.technet.com/b/uc/

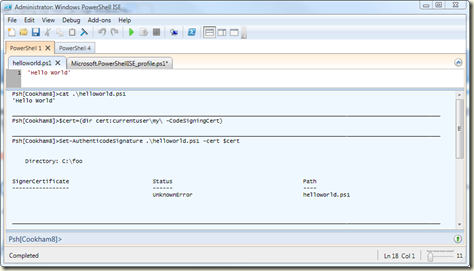

- Lync Server PowerShell Team’s Blog – a look at what’s happening with Lync and PowerShell, with some great discussion of managing Lync through PowerShell. http://blogs.technet.com/b/csps/

- Jens Trier Rasmussen – Jens is a Senior Program Manager in the Early Deployment & Readiness team at Microsoft and a very knowledgeable guy. http://blogs.technet.com/b/jenstr/about.aspx

Non-MS Blog Posts

- Lync Server 2010 RC Deployment - http://blog.schertz.name/2010/09/lync2010rc-deployment-part1/

- Step-by-step Microsoft Lync 2010 Consolidated Standard Server Install Guide - http://imaucblog.com/archive/2010/09/15/step-by-step-microsoft-lync-2010-consolidated-standard-server-install-guide/

Podcasts

- Cezar Ungureanasu and Thomas Lee on Lync Server 2010 and PowerShell – superstar Cezar Ungureanasu (Lync Server PowerShell PM) and Thomas Lee talk about Lync Server’s PowerShell Interface. http://blogs.technet.com/b/csps/archive/2010/09/09/podcastcezarthomasaug2010.aspx

Planning Tools

- Microsoft Lync Server 2010 Planning Tool RC – this is a great tool to help you design your infrastructure prior to deployment. http://www.microsoft.com/downloads/en/details.aspx?FamilyID=bcd64040-40c4-4714-9e68-c649785cc43a&displaylang=en

- Lync and Lync Server System Requirements – an official look at what’s required to run Lync Server 2010 and the Lync clients. Get this at: http://www.microsoft.com/downloads/en/details.aspx?FamilyID=634a3616-4199-4d51-88ee-618e72d91b7c&displaylang=en

Product Documentation

Microsoft has released several white papers on the http://technet.microsoft.com/en-us/lync/default.aspx site, including:

- Determining Your Infrastructure Requirements for Lync Server 2010 (RC).doc

- Planning for Archiving Lync Server 2010 (RC).doc

- Planning for Clients and Devices Lync Server 2010 (RC).doc

- Planning for Enterprise Voice Lync Server 2010 (RC).doc

- Planning for External User Access Lync Server 2010 (RC).doc

- Planning for IM and Conferencing Lync Server 2010 (RC).doc

- Planning for Other Features Lync Server 2010 (RC).doc

- Planning for Your Organization Lync Server 2010 (RC).doc

Pricing and Licensing

Lync Server 2010 follows a Server/Client Access License (CAL) model whereby a Lync Server 2010 license is required for each operating system environment running Lync Server 2010 and a CAL is required for each user or device accessing the Lync Server.

- Pricing for Lync Server and Client – this page sets out the licensing details for Lync Server and the Lync client. Pricing on the page is ‘estimated’, so check with your reseller, as actual prices may vary from the ‘official’ costs shown here.
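To make the Server/CAL counting rule concrete, here is a minimal sketch that tallies licenses for a hypothetical deployment. It assumes exactly what the paragraph above says: one server license per operating system environment (OSE) running Lync Server 2010, plus one CAL per user or per device. For simplicity it picks a single CAL type for the whole organization, ignores pricing and any edition tiers, and the names (`count_licenses`, `LicenseCount`, `per_user`) are illustrative only, not anything from Microsoft.

```python
from dataclasses import dataclass


@dataclass
class LicenseCount:
    """Hypothetical tally of licenses under a Server/CAL model."""
    server_licenses: int
    cals: int


def count_licenses(server_oses: int, users: int, devices: int,
                   per_user: bool = True) -> LicenseCount:
    """Count licenses for a hypothetical Lync Server 2010 deployment.

    Illustrative assumptions, not Microsoft's licensing terms:
    - one server license per OSE running Lync Server 2010
    - one CAL per user (per_user=True) or one CAL per device (per_user=False)
    """
    if min(server_oses, users, devices) < 0:
        raise ValueError("counts must be non-negative")
    # The server side scales with OSEs; the CAL side scales with whichever
    # of users or devices the organization chooses to license.
    cals = users if per_user else devices
    return LicenseCount(server_licenses=server_oses, cals=cals)


if __name__ == "__main__":
    print(count_licenses(3, 500, 650))                  # user CALs
    print(count_licenses(3, 500, 650, per_user=False))  # device CALs
```

For example, with three OSEs, 500 users and 650 devices, licensing per user needs 500 CALs while licensing per device needs 650; the server license count (3) is the same either way.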

Support

At present there’s no formal support for Lync 2010. The two best places to find more information are Microsoft’s OCS 2007 Forums:

- For details on Lync Server 2010 RC – see the Office Communications Forums at: http://social.technet.microsoft.com/Forums/en-US/category/ocs

- For details on Lync 2010 RC Clients, see the Office Communicator Forums at: http://social.microsoft.com/Forums/en-US/category/officecommunicator/

Webcasts – CS14 at TechEd

Microsoft presented CS14 topics at TechEd North America earlier in 2010, and the presentations and slide decks are all available for download and use. These presentations refer to CS ‘14’, but aside from the branding the details are the same!

- UNC205 – CS14: Transforming the Way People Communicate – Gurdeep Singh Pall’s OCS 14 keynote - http://www.msteched.com/2010/NorthAmerica/UNC205

- UNC206 – Microsoft Communications Online: Present and future - http://www.msteched.com/2010/NorthAmerica/UNC206

- UNC208 – CS14 Devices - http://www.msteched.com/2010/NorthAmerica/UNC208

- UNC311 – CS14 Architecture - http://www.msteched.com/2010/NorthAmerica/UNC311

- UNC312 – CS14 Network Considerations - http://www.msteched.com/2010/NorthAmerica/UNC312/

- UNC313 – CS14 Voice Architecture and Planning - http://www.msteched.com/2010/NorthAmerica/UNC313/

- UNC314 – CS14 Voice Deployment - http://www.msteched.com/2010/NorthAmerica/UNC314/

- UNC315 – CS14 Setup and Deployment - http://www.msteched.com/2010/NorthAmerica/UNC315/

- UNC316 – CS14 Monitoring and Reporting - http://www.msteched.com/2010/NorthAmerica/UNC316/

- UNC317 – CS14 Management Experience - http://www.msteched.com/2010/NorthAmerica/UNC317/

- UNC318 – CS14 Conferencing and Backend - http://www.msteched.com/2010/NorthAmerica/UNC318

- UNC321 – Interoperability of IM, Presence, A/V and Voice - http://www.msteched.com/2010/NorthAmerica/UNC321

- UNC322 – The New Communicator 14 Platform - http://www.msteched.com/2010/NorthAmerica/UNC322

- UNC401 – Advanced SIP Solutions and UCMA - http://www.msteched.com/2010/NorthAmerica/UNC401

Press and PR Coverage

As often happens, much of the industry found out about Lync Server’s public debut from non-Microsoft sources, followed quickly by Microsoft’s own announcement. Here is some of the press background if you are interested.

- Mary Jo Foley’s ‘announcement’ that Lync 2010 RC has shipped: http://www.zdnet.com/blog/microsoft/microsoft-delivers-near-final-test-build-of-lync-pbx-competitor/7360

- Microsoft’s official press release: http://www.microsoft.com/Presspass/press/2010/sep10/LyncPR.mspx

- Introducing Microsoft Lync, the next OCS – the product team’s blog post that ‘announces’ Lync. http://blogs.technet.com/b/uc/archive/2010/09/13/introducing-microsoft-lync-the-next-ocs.aspx

- Latest Coverage – a page from MS listing Lync-related press articles. http://www.microsoft.com/en-us/lync/reviews-news.aspx

Email me any changes or updates and I’ll try to keep this list up to date!

[UPDATES TO ORIGINAL POSTING]

- 15 Sept 2010 - Added CS14 webcasts, added the Podcasts section, did some minor reorganization of the list itself, and added this update list.

- 17 Sept 2010 – Separated out the Press/PR stuff from basic tech info. Added Lab deployment guide reference.

- 18 Sept 2010 – fixed missing link to Thomas/Cezar's podcast, added Worked Deployment Guide from Jeff Schertz.

- 4 Oct 2010 – added details on licensing and links to planning documentation.